Introduction to Balanced Box-Decomposition Trees

Stardate 96893.29.

You are the USS Euler’s Science Officer at a moment when the computer graphical displays and voice systems went down. You only have enough deuterium for a short travel and need to find the nearest star system. This is not a simple matter of looking at a chart. You have multiple dimensions in which you can travel. In a bid for galactic peace, the Federation mandated that both Emacs and Vim should be installed in all computers. You open your favourite editor and, fortunately, know exactly how to formulate the solution to your problem: a $d$-dimensional nearest neighbour algorithm.

Given a dataset $\mathcal{D}$ of $n$ points in a space $X$ we want to be able to tell which are the closest point to a query point $q \in X$, preferably in a way which is computationally cheaper than brute force methods (e.g. iterating through all of the points) which typically solve this problem in $\mathcal{O}(dn)$ 1.

$X$ could have $d$ dimensions (that is $\mathcal{D} \subset X : \mathbb{R}^d$) and we define closest using2 Minkowski distance metrics, that is:

$$ L_m = \left(\sum_{i=1}^d |p_i - q_i|^m\right)^{\frac{1}{m}},\qquad p,q \in X : \mathbb{R}^d. $$

A potential solution for this problem would be to use kd-trees, which for low dimension scenarios provide $\mathcal{O}(\log n)$ query times 3. However, as the number of dimensions increase (as quickly as $d>2$) the query times also increase as $2^d$.

The case can be made then for approximate nearest neighbour (NN) algorithms and that’s precisely what we will discuss here, namely the Balanced Box-Decomposition Tree (BBD, 1). The definition of approximate NN for a query point $q$ can be given as

$$ \text{dist}(p, q) \leq (1+\epsilon)\text{dist}(p^{\star},q),\qquad \epsilon > 0, $$

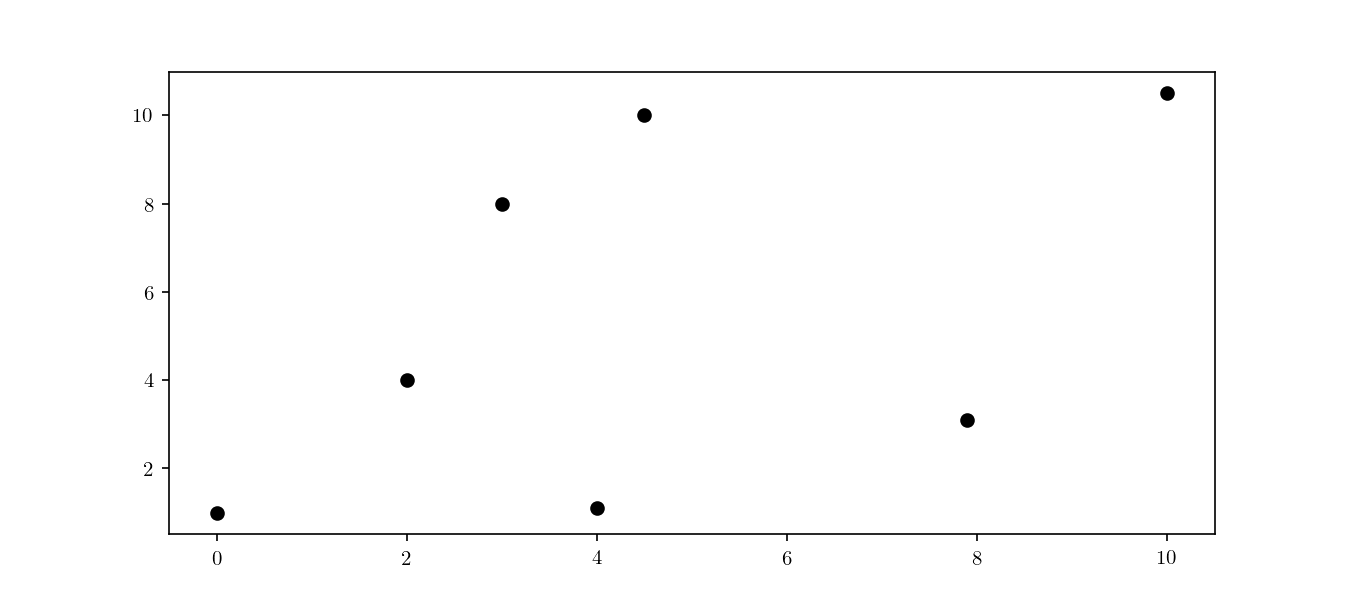

where $p$ is the approximate NN and $p^{\star}$ is the true NN. Let’s consider, for the sake of visualisation, a small two dimensional dataset $\mathcal{D} \to \mathbb{R}^2$ as shown in Figure 1.

Space decomposition

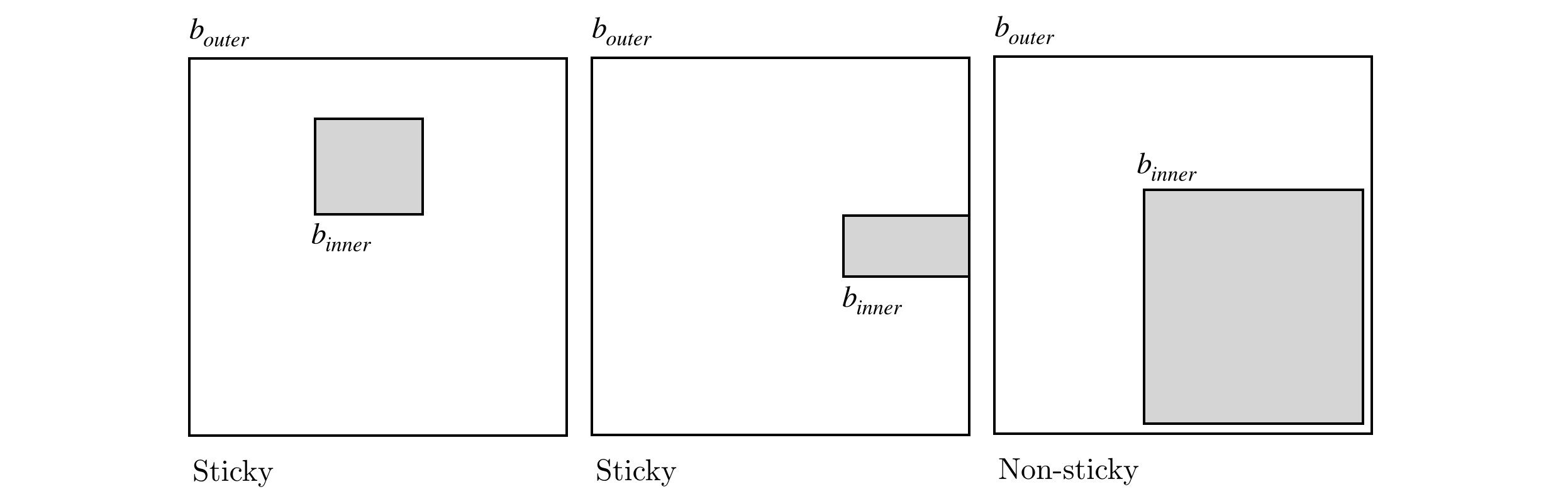

BBD trees belong to the category of hierarchical space decomposition trees. In BBD trees, specifically, space is divided in $d$-dimensional rectangles and cells. Cells can either represent another $d$-dimensional rectangle or the intersection of two rectangles (one, the outer box fully enclosing the other, the inner box). Another important distinction of BBD trees is that rectangle’s size (in this context, the largest length in all of the $d$ dimensions) is bounded by a constant value. The space decomposition must follow an additional rule which is boxes must be sticky. If we consider a inner box $[x_{inner}, y_{inner}]$ contained in a outer box $[x_{outer}, y_{outer}]$, such that

$$ [x_{inner}, y_{inner}] \subseteq [x_{outer}, y_{outer}], $$

then, considering $w = y_{inner} - x_{inner}$, the box is considered sticky if either

$$ \begin{aligned} x_{inner}-x_{outer} = 0 &\lor x_{inner}-x_{outer} \nleq w \\ y_{outer}-y_{inner} = 0 &\lor y_{outer}-y_{inner} \nleq w. \end{aligned} $$

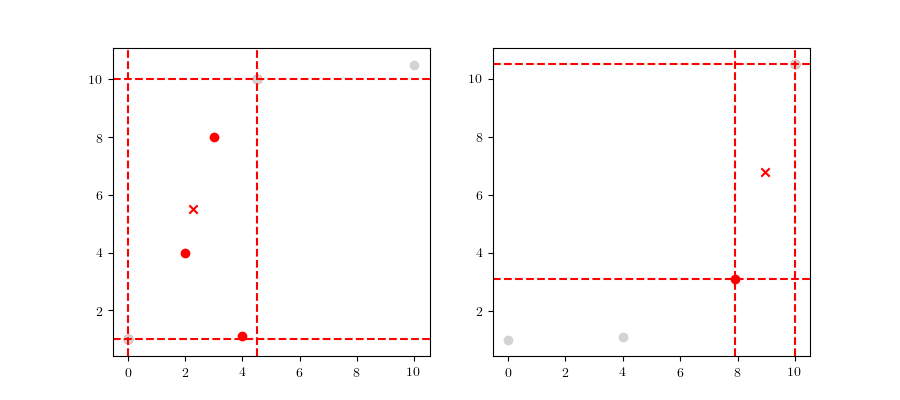

An illustration of the stickiness concept can viewed in the diagram below.

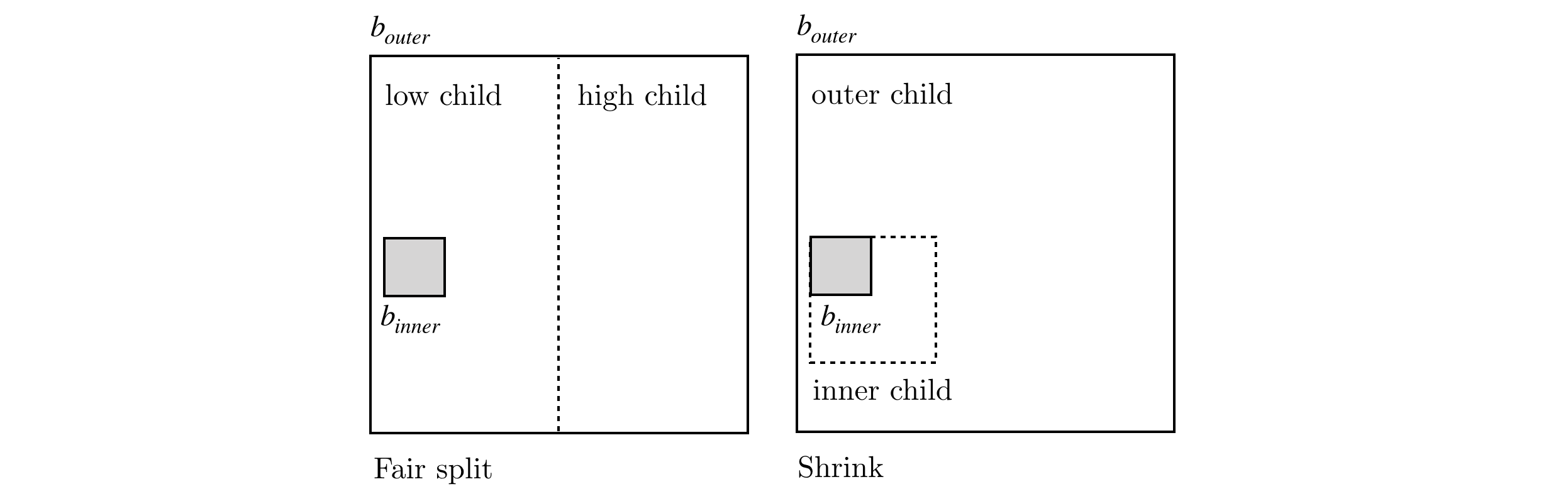

Stickiness provides some important geometric properties to the space decomposition which will be discussed further on. The actual process of space decomposition will produce a tree of nodes, each with an associated $d$-dimensional rectangle enclosing a set of points. Each node will be further decomposed into children nodes, containing a region of space with a subset of the parent’s data points. If a node has no children it will be called a leaf node. The division process can occur either by means of:

- a fair split, this is done by partitioning the space with an hyperplane, resulting in a low and high children nodes

- a shrink, splitting the box into a inner box (the inner child) and a outer box (the outer child).

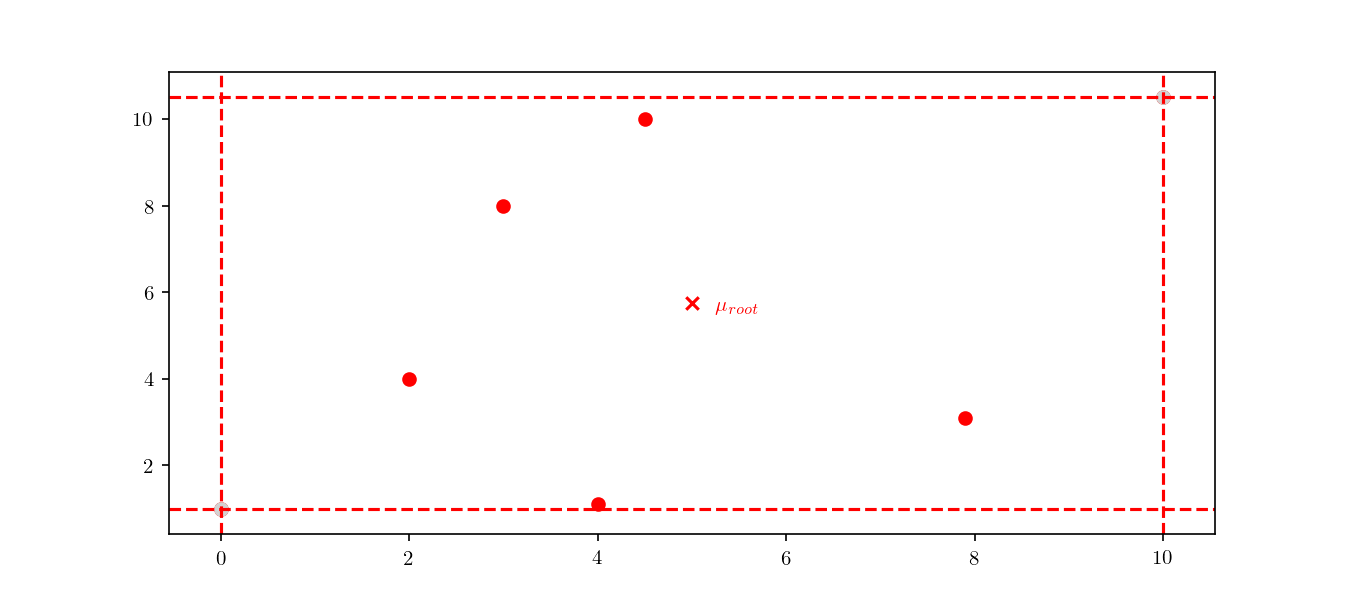

The initial node of the tree, the root node, will include all the dataset points, $\mathcal{D}$. In the Figure 4 we can see a representation of the root node for the dataset presented above. We can see the node boundaries in dashed red lines as well as the node’s center, marked as $\mu_{root}$.

The actual method to calculate the division can either be based on the midpoint algorithm or the middle interval algorithm. The method used for these examples is the latter, for which more details can be found in 4. The next step is to divide the space according to the previously mentioned rules. As an example, we can see the root node’s respective children in Figure 5.

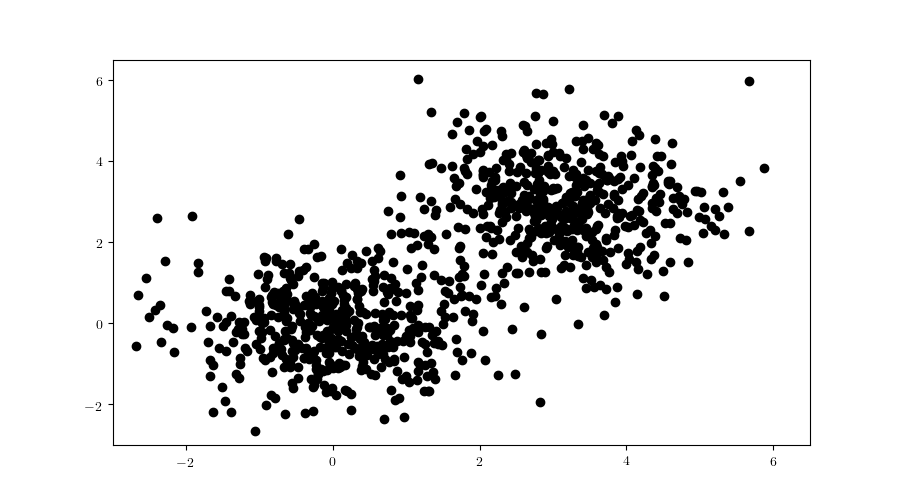

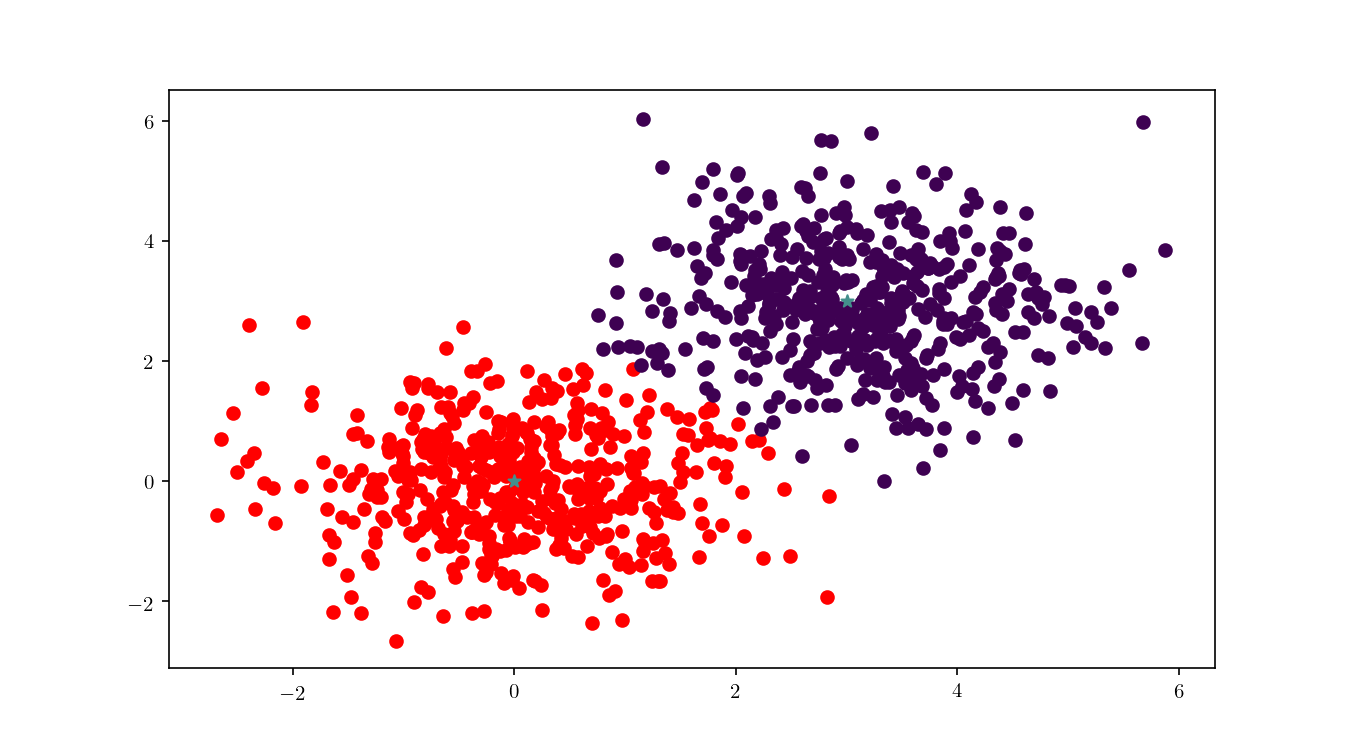

This process is repeated until the child nodes are leaves and cannot be divided anymore. To better visualise the construction process it would be helpful to have a larger tree, so we will now consider still the 2-dimensional case, but now with a larger dataset (Figure 6), consisting of 2000 samples in total, each half from a bivariate Gaussian distribution:

$$ \begin{aligned} \text{X}_1 &\sim \mathcal{N}([0,0], \mathbf{I}) \\ \text{X}_2 &\sim \mathcal{N}([3, 3], \mathbf{I}). \end{aligned} $$

With this larger dataset, we have enough points to illustrate the tree node building. This time, we will start from the root node and always follow either the “lower” nodes or the “upper” nodes (as show in Figure 7). We can clearly see the cells getting smaller, until finally we have a single point included (i.e. a leaf node).

This division process illustrates an important property of BBD-trees. Although other space decomposition algorithms (such as kd-trees) display a geometric reduction of number of points enclosed in each cell, methods such as the BBD-tree, which impose constraints on the cell’s size aspect ratio as stated before, display not only a geometric reduction in the number of points, but also in the cell’s size as well. The construction cost of a BBD-tree is $\mathcal{O}(dn \log n)$ and the tree itself will have $\mathcal{O}(n)$ nodes and $\mathcal{O}(\log n)$ height.

Tree querying

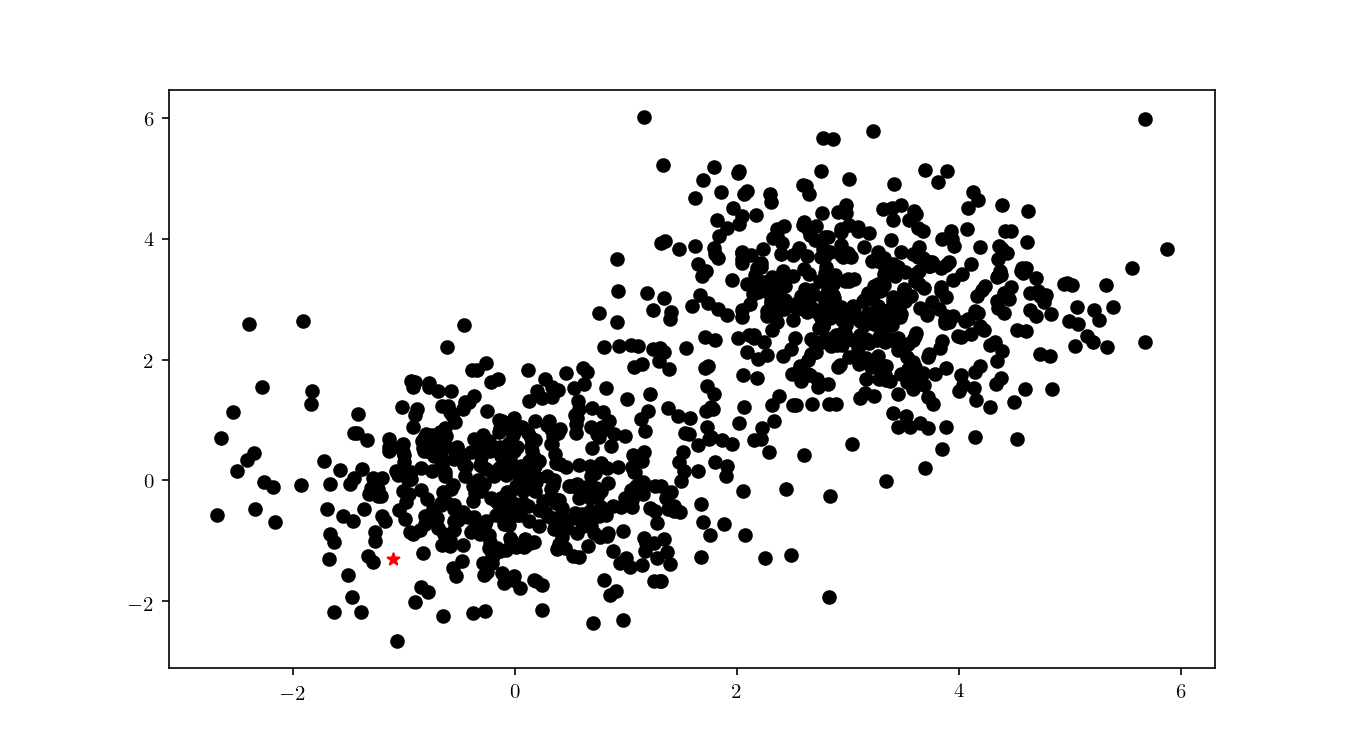

Now that we have successfully constructed a BBD-tree, we want to actually find the (approximate) nearest neighbour of an arbitrary query point $q$ (Figure 8).

The first step consists in descending the tree in order to locate the smallest cell containing the query point $q$. This process is illustrated for the bivariate data in Figure 9.

Once the cell has been located, we proceed to enumerate all the leaf nodes contained by it and calculate our distance metric $L_2$ in this case) between the query point $q$ and the leaf nodes, eventually declaring the point with the smallest $L_2$ as the aproximate NN. BBD-trees provide strong guarantees that the ANN will be located within this cell and not in a neighbouring cell. In Figure 10 we zoomed in the smallest cell containing $q$ and show the associated calculated $L_2$ distance for each node.

An important property of BBD-trees is that the tree structure does not need to be recalculated if we change either $\epsilon$ or if we decide to use another $L_m$ distance metric 1. The query time for a point $q$ in a BBD-tree is $\mathcal{O}(\log n)$. For comparison, if you recall, the query time for a brute force method is typically $\mathcal{O}(dn)$.

Filtering and k-NN

Great. Now that you solved the USS Euler’s problem, you want to make a suggestion to the federation. Where to place several star-bases and how to divide the system’s coverage between them. An immediate generalisation of this method is easily applicable to the problem of clustering. Note that, at the moment, we are not concerned with determining the “best” clusters for our data5. Given a set of points $Z = {z_1, z_2, \dots, z_n}$, we are concerned now in partitioning the data in clusters centred in each of the $Z$ points. A way of looking at this, is that we are building, for each point $z_n$ a Voronoi cell $V(z_n)$. This is achieved by a method called filtering. Filtering, in general terms, works by walking the tree with the list of candidate centres ($Z$) and pruning points from the candidate list as we move down. We will denote an arbitrary node as $n$, $z^{\star}_w$ and $n_w$ respectively as the candidate and the node weight, $z^{\star}_n$ and $n_n$ as the candidate and node count. The algorithm steps, as detailed in 6, are detailed below:

- Filter($n$, $Z$) {

- $C \leftarrow n.cell$

- if ($n$ is a leaf) {

- $z^{\star} \leftarrow$ the closest point in $Z$ to $n.point$

- $z^{\star}_w \leftarrow z^{\star}_w + n.point$

- $z^{\star}_n \leftarrow z^{\star}_n + 1\qquad$

- } *else {

- $z^{\star} \leftarrow$ the closest point in $Z$ to $C$’s midpoint

- for each ($z \in Z \setminus {z^{\star}}$) {

- if ($z.isFarther(z^{\star},C)$) {

- $Z \leftarrow Z \setminus {z}$

- }

- if ($|Z|=1$) {

- $z^{\star}_w \leftarrow z^{\star}_w + n_w$

- $z^{\star}_n \leftarrow z^{\star}_n + n_n$

- } else {

- Filter($n_{left}, Z$)

- Filter($n_{right}, Z$)

- } }

To illustrate the assignment of data points to the centres, we will consider the previous bivariate Gaussian data along with two centres, $z_1 = {0,0}$ and $z_2 = {3, 3}$. Figure 11 shows the process of splitting the dataset $\mathcal{D}$ into two clusters, namely the subsets of data points closer to $z_1$ or $z_2$.

We can see in Figure 12 the final cluster assignment of the data points. With a $\mathbb{R}^2$ dataset and only two centres the organisation of points follows a simple perpendicular bisection of the segment connecting the centres, as expected.

In Figure 13 we can see more clearly the dataset clusters changing when center $z_1$ is moving around the plane. BBD-trees can play an important role in improving $k$-means performance, as described in 6.

This concludes a (short) introduction to BBD-trees, I hope you enjoyed it. If you have any comments or suggestions, please let me know at Mastodon.

Arya, S., Mount, D. M., Netanyahu, N. S., Silverman, R., & Wu, A. Y. (1998). An optimal algorithm for approximate nearest neighbor searching fixed dimensions. Journal of the ACM. https://doi.org/10.1145/293347.293348 ↩︎ ↩︎ ↩︎

The $L_m$ distance may be pre-computed in this method to avoid recalculation for each query. ↩︎

Friedman, J. H., & Bentley, J. L. (1977). RA Finkel. An algorithm for finding best matches in logarithmic expected time. ACM Transactions on Mathematical Software, 3(3), 209-226. ↩︎

Callahan, P. B., & Kosaraju, S. R. (1995). A decomposition of multidimensional point sets with applications to k-nearest-neighbors and n-body potential fields. Journal of the ACM, 42(1), 67-90. ↩︎

This would be a k-means problem. I intend to write a blog post on k-means clustering (and the role BBD-trees can play) in the future. ↩︎

Kanungo, T., Mount, D. M., Netanyahu, N. S., Piatko, C. D., Silverman, R., & Wu, A. Y. (2002). An efficient k-means clustering algorithms: Analysis and implementation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(7), 881–892. https://doi.org/10.1109/TPAMI.2002.1017616 ↩︎ ↩︎